The algorithm problem: beyond human calculation. From the article:

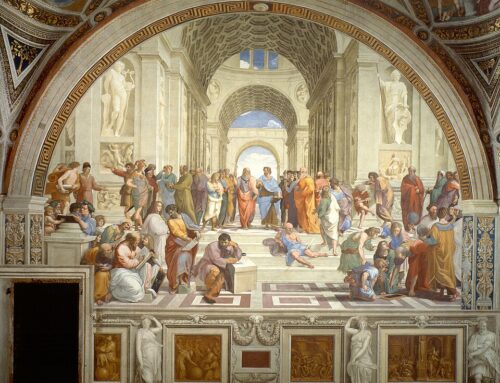

Throughout their history, algorithms have been built to solve problems. They have been used to make astronomical calculations, build clocks and turn secret information into code. “Up till the nineties,” the researcher said, “algorithms still tended to be RSAs – Really Simple Algorithms. Previously it was pretty clear how stuff happened. You take the original Google algorithm. It was basically a popularity study. You’d just surface (or rank more highly) things that people clicked on more. In general, the people who made it understood how the thing worked.” Some algorithms were more complicated than others, but the input > process > output was generally transparent and understandable, at least to the people who built and used them….

Algorithms have changed, from Really Simple to Ridiculously Complicated. They are capable of accomplishing tasks and tackling problems that they’ve never been able to do before. They are able, really, to handle an unfathomably complex world better than a human can. But exactly because they can, the way they work has become unfathomable too. Inputs loop from one algorithm to the next; data presses through more instructions, more code. The complexity, dynamism, the sheer not-understandability of the algorithm means that there is a middle part – between input and output – where it is possible that no one knows exactly what they’re doing. The algorithm learns whatever it learns. “The reality is, professionally, I only look under the hood when it goes wrong. And it can be physically impossible to understand what has actually happened.”…

“You’ve seen how difficult it is to really understand. Sometimes I struggle with it, and I created it. The reality is that if the algorithm looks like it’s doing the job that it’s supposed to do, and people aren’t complaining, then there isn’t much incentive to really comb through all those instructions and those layers of abstracted code to work out what is happening.” The preferences you see online – the news you read, the products you view, the adverts that appear – are all dependent on values that don’t necessarily have to be what they are. They are not true, they’ve just passed minimum evaluation criteria….

“Weapons of math destruction” is how the writer Cathy O’Neil describes the nasty and pernicious kinds of algorithms that are not subject to the same challenges that human decision-makers are. Parole algorithms (not Jure’s) can bias decisions on the basis of income or (indirectly) ethnicity. Recruitment algorithms can reject candidates on the basis of mistaken identity. In some circumstances, such as policing, they might create feedback loops, sending police into areas with more crime, which causes more crime to be detected….

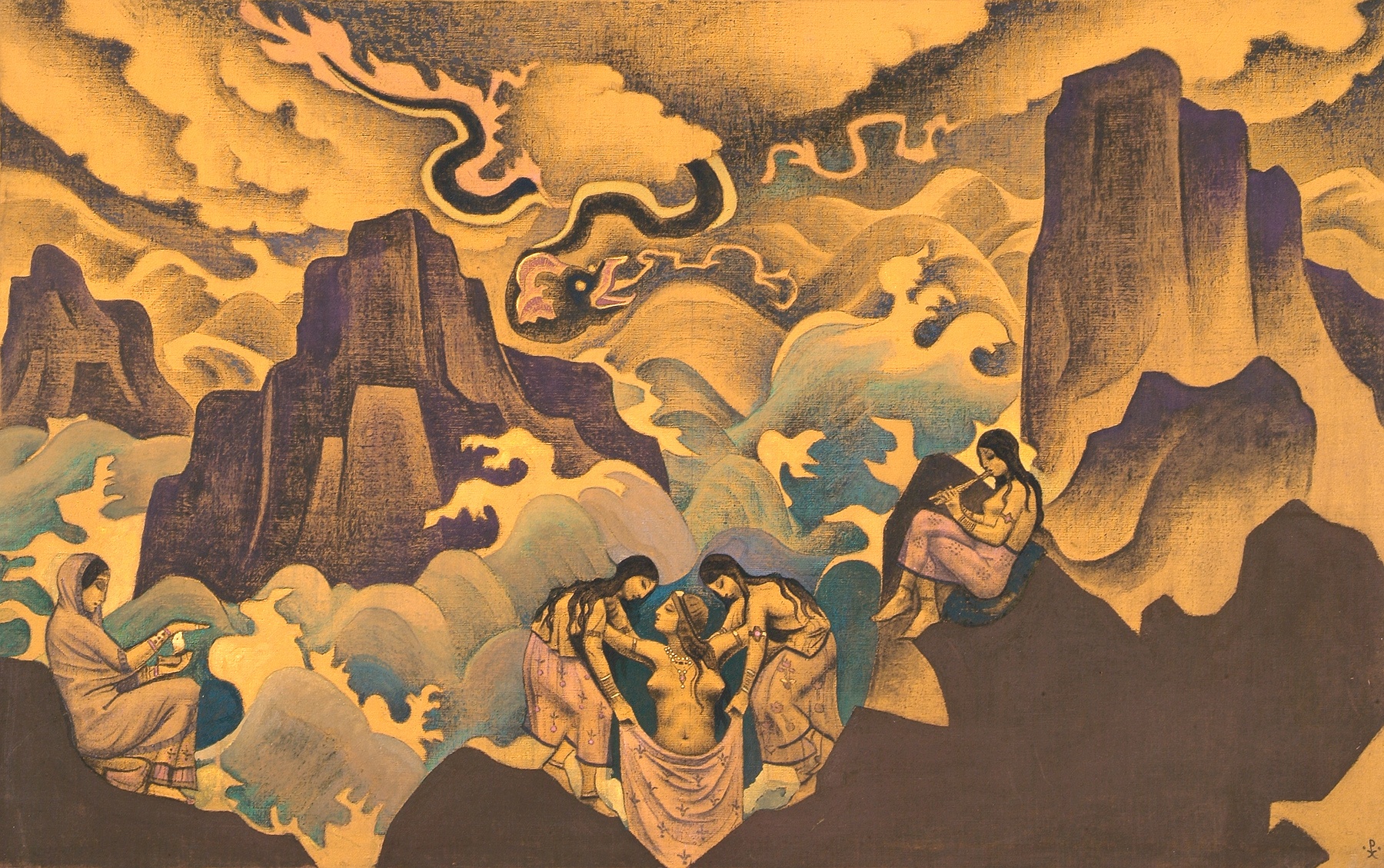

There is something happening here that is deeper than any single algorithm. They are at the forefront of what, at times, appears to be almost a new philosophy. “God is the machine,” the researcher told me. “The black box is the truth. If it works, it works. We shouldn’t even try to work out what the machine is spitting out – they’ll pick up patterns we won’t even know about.”

h/t Pocket Hits
