AI rules: the new frontier of ethics and technology. From the article:

The more programmers push their machines to make smart decisions that surprise and delight us, the more they risk triggering something unexpected and awful.

The invention of the internet took most philosophers by surprise. This time, A.I. ethicists view it as their job to keep up….

A.I. ethicists consult with schools, businesses and governments. They train tech entrepreneurs to think about questions like the following. Should tech companies that collect and analyze DNA data be allowed to sell that data to pharmaceutical firms in order to save lives? Is it possible to write code that offers guidance on whether to approve life insurance or loan applications in an ethical way? Should the government ban realistic sex robots that could tempt vulnerable people into thinking they are in the equivalent of a human relationship? How much should we invest in technology that throws millions of people out of work?

Tech companies themselves are steering more resources into ethics, and tech leaders are thinking seriously about the impact of their inventions….

In June, for example, Google, seeking to reassure the public and regulators, published a list of seven principles for guiding its A.I. applications. It said that A.I. should be socially beneficial, avoid creating or reinforcing unfair bias, be built and tested for safety, be accountable to people, incorporate privacy design principles, uphold high standards of scientific excellence, and be made available for uses that accord with these principles.

In response, [Tae Wan Kim, an A.I. ethicist at Carnegie Mellon University in Pittsburgh], published a critical commentary on his blog. The problem with promising social benefits, for example, is that “Google can take advantage of local norms,” he wrote. “If China allows, legally, Google to use AI in a way that violates human rights, Google will go for it.” (At press time, Google had not responded to multiple requests for comment on this criticism.)

The biggest headache for A.I. ethicists is that a global internet makes it harder to enforce any universal principle like freedom of speech. The corporations are, for the most part, in charge. That is especially true when it comes to deciding how much work we should let machines do….

And as programmers try to make this type of reasoning possible for machines, invariably they base their algorithms on data derived from human behavior. In a fallen world, that’s a problem.

“There’s a risk of A.I. systems being used in ways that amplify unjust social biases,” says [Shannon] Vallor, the philosopher at Santa Clara University. “If there’s a pattern, A.I. will amplify that pattern.”

Loan, mortgage or insurance applications could be denied at higher rates for marginalized social groups if, for example, the algorithm looks at whether there is a history of homeownership in the family. A.I. ethicists do not necessarily advocate programming to carry out affirmative action, but they say the risk is that A.I. systems will not correct for previous patterns of discrimination.
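The homeownership example can be made concrete with a toy sketch. This is a hypothetical illustration, not any lender's actual model: the `Applicant` fields, the `approve` rule and the data are all invented for the example. The rule never looks at an applicant's group. Because it keys on family homeownership, a feature that reflects past patterns, it still denies one group at a higher rate.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Applicant:
    group: str               # demographic group; the rule below never reads it
    income_ok: bool          # hypothetical: meets an income threshold
    family_owned_home: bool  # hypothetical proxy feature inherited from past patterns


def approve(a: Applicant) -> bool:
    """Hypothetical lending rule: ignores `group`, but requires a family
    history of homeownership in addition to sufficient income."""
    return a.income_ok and a.family_owned_home


def denial_rates(applicants: list[Applicant]) -> dict[str, float]:
    """Fraction of applicants in each group that `approve` rejects."""
    totals: dict[str, int] = {}
    denied: dict[str, int] = {}
    for a in applicants:
        totals[a.group] = totals.get(a.group, 0) + 1
        if not approve(a):
            denied[a.group] = denied.get(a.group, 0) + 1
    return {g: denied.get(g, 0) / n for g, n in totals.items()}


# Made-up data: both groups pass the income check at the same rate (3 of 4),
# but group B's families were historically less likely to own homes.
applicants = [
    Applicant("A", True, True), Applicant("A", True, True),
    Applicant("A", True, False), Applicant("A", False, True),
    Applicant("B", True, False), Applicant("B", True, False),
    Applicant("B", True, True), Applicant("B", False, False),
]

if __name__ == "__main__":
    for group, rate in sorted(denial_rates(applicants).items()):
        print(f"group {group}: denied {rate:.0%}")
```

With this invented data the rule denies half of group A and three quarters of group B, even though income qualifications are identical across groups. Leaving the group label out of the inputs does not remove the gap, because the proxy feature carries the historical pattern into the decision.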

Ethicists are also concerned that relying on A.I. to make life-altering decisions cedes even more influence than they already have to corporations that collect, buy and sell private data, as well as to governments that regulate how the data can be used. In one dystopian scenario, a government could deny health care or other public benefits to people deemed to engage in “bad” behavior, based on the data recorded by social media companies and gadgets like Fitbit.

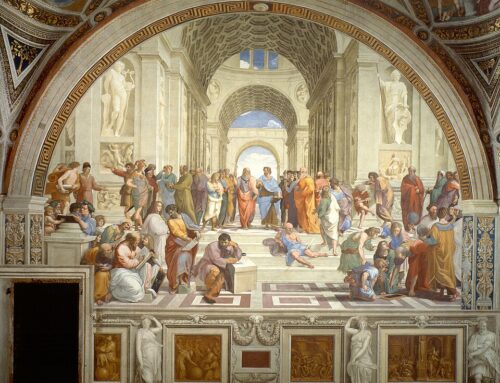

Every artificial intelligence program is based on how a particular human views the world, says [Brian] Green, the ethicist at Santa Clara. “You can imitate so many aspects of humanity,” he says, “but what quality of people are you going to copy?”

h/t @FullArtIntel
