A recent United Kingdom survey reports that 63% of the adult population is uncomfortable with allowing personal data to be used to improve healthcare and does not favor artificial intelligence (AI) systems replacing doctors and nurses in tasks they usually perform. Another study, conducted in Germany, found that medical students, the doctors of tomorrow, overwhelmingly buy into the promise of AI to improve medicine (83%) but are more skeptical that it will establish conclusive diagnoses in, for instance, imaging exams (56% disagree). When asked about the prospects of AI, United States decision-makers at healthcare organizations are confident that it will improve medicine, but roughly half of them think it will produce fatal errors, will not work properly, and will not meet currently hyped expectations. These survey data resonate with the ethical and regulatory challenges that surround AI in healthcare, particularly privacy, data fairness, accountability, transparency, and liability. Successfully addressing these challenges will foster the future of machine learning in medicine (MLm) and its positive impact on healthcare. Ethical and regulatory concerns about MLm can be grouped into three broad categories according to how and where they emerge, namely, around the sources of data needed for MLm and the approaches used in the development and deployment of MLm in clinical practice….

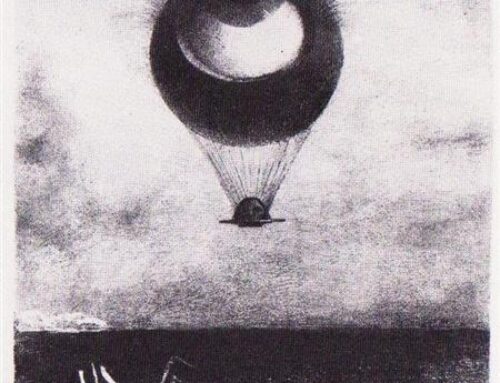

Perhaps the MLm raising the most difficult ethical and legal questions, and posing the greatest challenge to current modes of regulation, is the noninterpretable, so-called black-box algorithm, whose inner logic remains hidden even to its developers. This lack of transparency can preclude the mechanistic interpretation of MLm-based assessments and, in turn, reduce their trustworthiness. Moreover, disclosing basic yet meaningful details about medical treatment to patients, a fundamental tenet of medical ethics, requires that doctors themselves grasp at least the fundamental inner workings of the devices they use.

h/t FullArtIntel
