As activists, researchers, and journalists voice concerns over the rise of artificial intelligence, warning against biased, deceptive and malicious applications, the companies building this technology are responding. From tech giants like Google and Microsoft to scrappy A.I. start-ups, many are creating corporate principles meant to ensure their systems are designed and deployed in an ethical way. Some set up ethics officers or review boards to oversee these principles.

But tensions continue to rise as some question whether these promises will ultimately be kept. Companies can change course. Idealism can bow to financial pressure. Some activists — and even some companies — are beginning to argue that the only way to ensure ethical practices is through government regulation….

As companies and governments deploy these A.I. technologies, researchers are also realizing that some systems are woefully biased. Facial recognition services, for instance, can be significantly less accurate when trying to identify women or someone with darker skin. Other systems may include security holes unlike any seen in the past. Researchers have shown that driverless cars can be fooled into seeing things that are not really there.

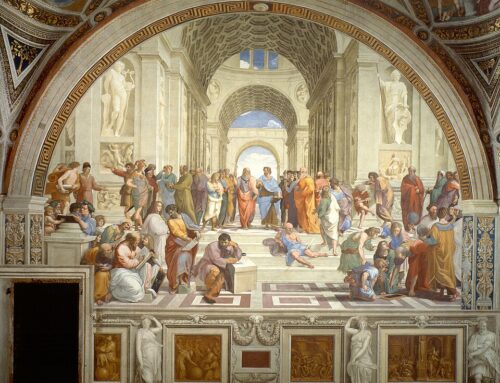

All this means that building ethical artificial intelligence is an enormously complex task. It gets even harder when stakeholders realize that ethics are in the eye of the beholder.

As some Microsoft employees protest the company’s military contracts, Mr. Smith said that American tech companies had long supported the military and that they must continue to do so. “The U.S. military is charged with protecting the freedoms of this country,” he told the conference. “We have to stand by the people who are risking their lives.”…

“You functionally have situations where the foxes are guarding the henhouse,” said Liz Fong-Jones, a former Google employee who left the company late last year.

In 2014, when Google acquired DeepMind, perhaps the world’s most important A.I. lab, the company agreed to set up an external review board to ensure that the lab’s research would not be used in military applications or otherwise unethical projects. But five years later, it is still unclear whether this board even exists.

Google, Microsoft, Facebook and other companies have created organizations like the Partnership on A.I. that aim to guide the practices of the entire industry. But these operations are largely toothless.
