Artificial awareness: summarizing the debate over human consciousness and AI. From the article:

Will AI ever be conscious? As with all things consciousness-related, the answer is that nobody really knows at this point, and many think it may be objectively impossible for us to tell whether the slippery phenomenon ever does show up in a machine….

The possibility that we might mistakenly infer consciousness on the basis of outward behavior is not an absurd proposition. It’s conceivable that once we succeed in building artificial general intelligence (AGI), the kind that, unlike everything out there right now, isn’t narrow and can adapt, learn, and apply itself in a wide range of contexts, the technology will feel conscious to us, whether or not it actually is.

Imagine a sort of Alexa or Siri on steroids, a program that you can converse with and that is as adept as any human at communicating with varied intonation and creative wit. The line quickly blurs….

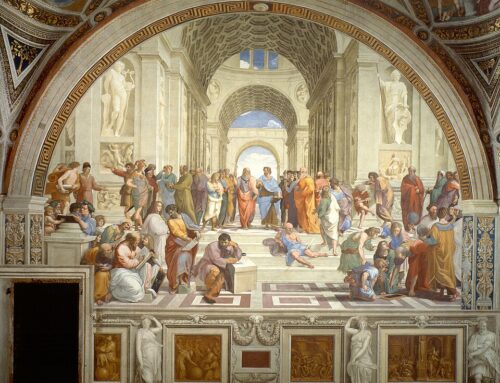

[Douglas] Hofstadter’s perspective is critical. If these hyper-capable algorithms aren’t built with a proper understanding of our own minds informing them, an understanding that is still very much inchoate, how could we know if they attain conscious intelligence? More pressingly, without a clear understanding of the phenomenon of consciousness, will charging into the future with this technology create more problems than it solves?…

It’s certainly possible that the scales are tipping in favor of those who believe AGI will be achieved sometime before the century is out. In 2013, Nick Bostrom of Oxford University and Vincent Mueller of the European Society for Cognitive Systems published a survey in Fundamental Issues of Artificial Intelligence that gauged how experts in the AI field perceived the timeframe in which the technology could reach human-level ability.

The report reveals “a view among experts that AI systems will probably (over 50%) reach overall human ability by 2040-50, and very likely (with 90% probability) by 2075.”…

Futurist Ray Kurzweil, the computer scientist behind music-synthesizer and text-to-speech technologies, is a believer in the fast approach of the singularity as well…. [A]s he says in a recent talk with the Society for Science, humanity will merge with the technology it has created, uploading our minds to the cloud. As admirable as that optimism is, such an outcome seems unlikely, given our newly forming understanding of the brain and its relationship to consciousness.

Christof Koch, an early advocate of the push to identify the physical correlates of consciousness, takes a more grounded approach while retaining some of the optimism for human-like AI appearing in the near future. Writing in Scientific American in 2019, he says, “Rapid progress in coming decades will bring about machines with human-level intelligence capable of speech and reasoning, with a myriad of contributions to economics, politics and, inevitably, warcraft.”…

The interweaving of consciousness and AI represents something of a civilizational high-wire balancing act. There may be no other field of scientific inquiry in which we are advancing so quickly while having so little idea of what we’re potentially doing.

If we manage, whether by intent or accident, to create machines that experience the world subjectively, the ethical implications would be monumental. It would also be a watershed moment for our species, and we would have to grapple with what it means to have essentially created new life. Whether these remain a distant possibility or await us just around the corner, we would do well to start considering them more seriously.

For other posts on artificial intelligence, see here.
